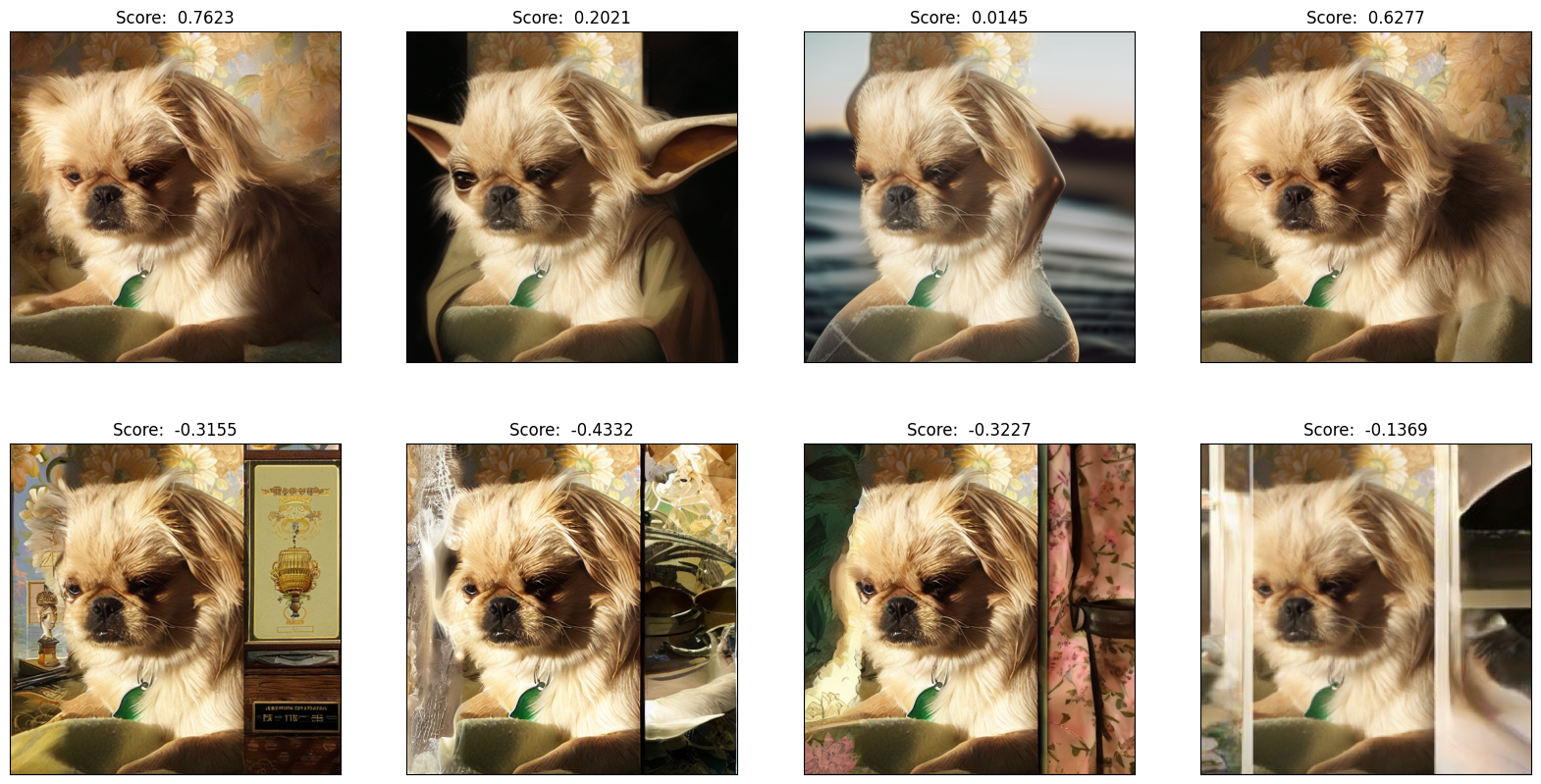

In this paper, we make the first attempt to align diffusion models for image inpainting with human aesthetic standards via a reinforcement learning framework, significantly improving the quality and visual appeal of inpainted images. Specifically, instead of directly measuring the divergence from paired images, we train a reward model on a dataset we constructed, consisting of nearly 51,000 images annotated with human preferences. We then adopt a reinforcement learning process to fine-tune the distribution of a pre-trained diffusion model for image inpainting in the direction of higher reward. Moreover, we theoretically derive an upper bound on the error of the reward model, which indicates the confidence of reward estimation throughout the reinforcement alignment process and thereby facilitates accurate regularization. Extensive experiments on inpainting comparison and downstream tasks, such as image extension and 3D reconstruction, demonstrate the effectiveness of our approach, showing significant improvements in the alignment of inpainted images with human preference compared with state-of-the-art methods. This research not only advances the field of image inpainting but also provides a framework for incorporating human preference into the iterative refinement of generative models based on modeling reward accuracy, with broad implications for the design of visually driven AI applications.
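As a rough illustration of fine-tuning toward higher reward while trusting uncertain reward estimates less, the sketch below turns reward scores and a per-sample error bound into weights for a per-sample training loss. It is not the paper's algorithm: the function names `reward_weights` and `weighted_loss`, the `error_bounds` input, and the `1 / (1 + bound)` confidence rule are all hypothetical choices made only for this example.

```python
from statistics import mean, pstdev


def reward_weights(rewards, error_bounds, eps=1e-8):
    """Return one weight per sample: standardized reward times confidence.

    Hypothetical rule (an assumption, not taken from the paper):
    confidence = 1 / (1 + error_bound), so a sample whose reward estimate
    has a larger error bound contributes less to the update.
    """
    if len(rewards) != len(error_bounds):
        raise ValueError("rewards and error_bounds must have the same length")
    mu = mean(rewards)
    sigma = pstdev(rewards)
    weights = []
    for reward, bound in zip(rewards, error_bounds):
        advantage = (reward - mu) / (sigma + eps)  # above-average reward > 0
        confidence = 1.0 / (1.0 + bound)           # in (0, 1] for bound >= 0
        weights.append(advantage * confidence)
    return weights


def weighted_loss(per_sample_losses, weights):
    """Average of weight * loss over the batch.

    Minimizing this lowers the loss of samples with positive weight
    (high reward) and raises it for samples with negative weight.
    """
    return mean(w * l for w, l in zip(weights, per_sample_losses))


# Toy batch: three inpainted samples scored by a (hypothetical) reward model.
rewards = [1.0, 2.0, 3.0]
error_bounds = [0.0, 0.0, 1.0]  # the third reward estimate is least certain
weights = reward_weights(rewards, error_bounds)
loss = weighted_loss([0.5, 0.5, 0.5], weights)
```

In this toy batch the third sample has the highest reward, but its weight is halved because its assumed error bound is the largest, which is the kind of confidence-aware regularization the abstract alludes to.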

Novel view synthesis on the KITTI dataset. For each scene, we show the warping prompt, which is obtained by warping the given view and therefore contains holes (missing regions). We inpaint this prompt to synthesize the novel view. Note that no ground truth is available for the synthesized novel views.

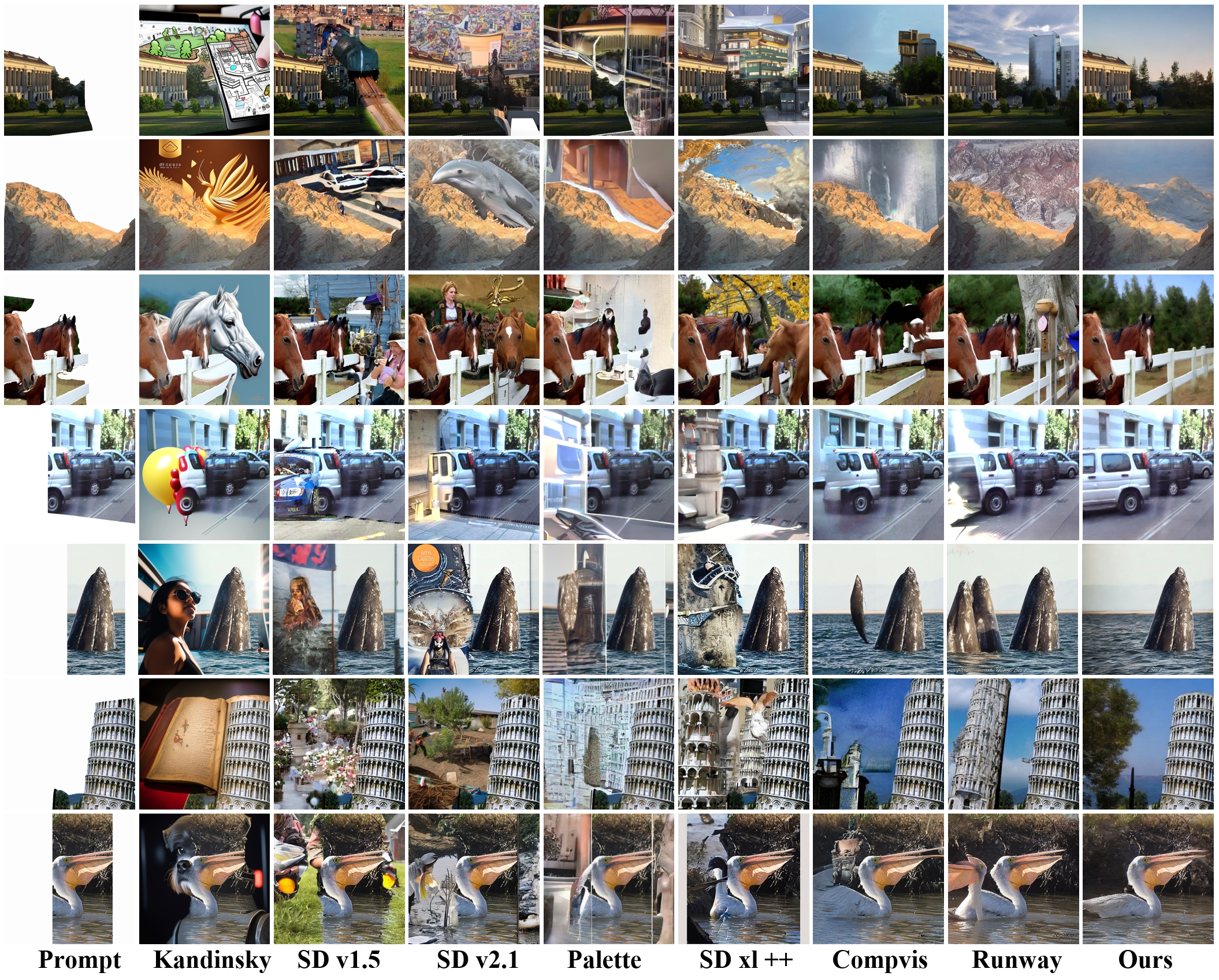

Visual comparisons of our approach and SOTA methods. The prompt images in the fifth and seventh rows are generated by boundary cropping, while those in the remaining rows are generated by warping. All images were generated with the same random seeds.

@article{liu2024prefpaint,
  title={PrefPaint: Aligning Image Inpainting Diffusion Model with Human Preference},
  author={Liu, Kendong and Zhu, Zhiyu and Li, Chuanhao and Liu, Hui and Zeng, Huanqiang and Hou, Junhui},
  journal={arXiv preprint arXiv:2410.21966},
  year={2024}
}